As a data strategist, my primary focus is on designing high-level AI solutions, with the technical implementation typically handled by other specialists. However, sometimes I get the chance to dive into the trenches myself, building quick proof-of-concept AI prototypes. These small-scale projects might include sentiment analysis APIs, recommendation engines, or automated classification systems that allow clients to experience the potential of AI for their business before committing to a major investment.

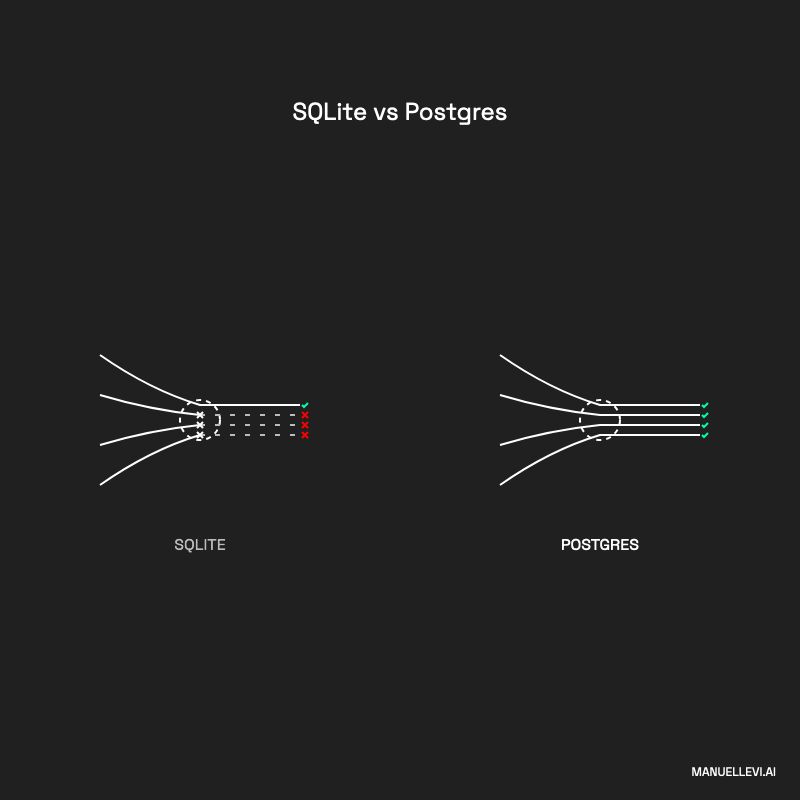

For rapid prototyping, I often rely on Django paired with SQLite. SQLite’s lightweight, zero-setup design makes it perfect for quickly testing ideas and validating concepts: you can train a model, store its predictions, and demonstrate results, all in one file. But as we prepare for production, where scalability, data sharing, and concurrent access are crucial for serving real-time predictions, SQLite’s limitations become apparent. It allows only one writer at a time and isn’t designed to be shared over a network by several application servers. That’s when PostgreSQL steps in: a robust, open-source database server that can handle production-level ML workloads.

PostgreSQL provides several critical features for AI applications:

- Scalability for handling large training datasets and prediction results

- Native JSON support for storing flexible model metadata and parameters

- Full-text search capabilities for training data preprocessing

- Reliable concurrency handling for simultaneous model training and inference

- Array data types ideal for storing feature vectors and embeddings

- Built-in support for statistical functions useful in model evaluation

These capabilities make PostgreSQL an excellent choice for deploying high-performance, AI-driven applications in data science. While it may not be the only option, and people will try to make it more complex than it has to be, it’s more than sufficient for many use cases.

In this guide, I’ll walk you through migrating a Django project from SQLite to PostgreSQL, helping you get ready for production and scale your AI application smoothly.

Removing Old Django Migrations

Important Pre-Migration Steps

⚠️ Back up your database and commit your code before starting this process. This is especially critical for AI projects, where you may have valuable training data or model results stored in your database. Preparing for the migration involves deleting specific files and clearing database records, so having backups lets you easily revert if anything goes wrong and protects your AI development work.
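A minimal sketch of the backup step for the SQLite file, assuming your database lives at db.sqlite3 (Django’s default name) and that a backups/ folder is an acceptable destination; both paths are assumptions, so adjust them to your project. It uses the online backup API of Python’s built-in sqlite3 module, which produces a consistent copy even if the development server still has the file open. Committing your code remains a separate git step.

```python
import sqlite3
from datetime import datetime
from pathlib import Path


def backup_sqlite(db_path: Path, backup_dir: Path) -> Path:
    """Copy a SQLite database to a timestamped file in backup_dir.

    Uses sqlite3's online backup API, so the copy is consistent even if
    another process (e.g. the dev server) has the database open.
    """
    if not db_path.exists():
        # sqlite3.connect would silently create an empty file instead
        raise FileNotFoundError(db_path)
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target = backup_dir / f"{db_path.stem}-{stamp}{db_path.suffix}"
    src = sqlite3.connect(db_path)
    dst = sqlite3.connect(target)
    try:
        src.backup(dst)  # copies every page of the "main" database
    finally:
        dst.close()
        src.close()
    return target


# Usage (paths are assumptions):
# backup_sqlite(Path("db.sqlite3"), Path("backups"))
```

Keeping the backup next to the project is only a convenience; copy it somewhere outside the repository if the data matters.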

Step-by-Step Guide to Safely Clearing Migrations

To safely delete old migration files in each app’s migrations folder without affecting other parts of the project, I recommend going through every app and removing its migration files.

You can do this by going to the root directory of your project and running:

find ./your_app_name/migrations/ -type f -name "*.py" ! -name "__init__.py" -delete

⚠️ Repeat this command for each app in your project. This ensures that only migration files within the specific app’s migrations folder are deleted.
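With several apps, repeating the command by hand is easy to get wrong. Here is a sketch of the same deletion in Python, assuming you pass in the names of your own apps only (the app name predictions in the usage comment is hypothetical). Like the find command, it removes every *.py file under each app’s migrations folder except __init__.py; it defaults to a dry run that only lists what it would delete.

```python
from pathlib import Path
from typing import Iterable, List


def clear_migrations(project_root: Path, apps: Iterable[str], dry_run: bool = True) -> List[Path]:
    """Delete (or, with dry_run=True, just list) migration modules of the given apps.

    Mirrors: find ./<app>/migrations/ -type f -name "*.py" ! -name "__init__.py" -delete
    """
    matched = []
    for app in apps:
        migrations_dir = project_root / app / "migrations"
        if not migrations_dir.is_dir():
            raise FileNotFoundError(f"No migrations folder for app {app!r}: {migrations_dir}")
        # rglob is recursive, like find; *.pyc files in __pycache__ don't match
        for path in sorted(migrations_dir.rglob("*.py")):
            if path.name == "__init__.py":
                continue  # keep the package marker so Django still sees the folder
            if not dry_run:
                path.unlink()
            matched.append(path)
    return matched


# Usage (hypothetical app name): inspect first, then delete
# print(clear_migrations(Path("."), ["predictions"]))
# clear_migrations(Path("."), ["predictions"], dry_run=False)
```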

Deleting SQLite DB

If you’ve just been prototyping, don’t care about the data in your database (ideally a prototype database shouldn’t hold anything you can’t regenerate), and have an easy process to repopulate it, go ahead and delete your db.sqlite3 (Django’s default name), db.sqlite, or equivalent file. This is often the case with AI prototypes, where you can easily regenerate predictions or reload training data from source files. However, if you have stored model evaluations or user feedback data, make sure you have exports of these before proceeding, for example with python manage.py dumpdata.
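Django’s python manage.py dumpdata command is the usual way to export data as fixtures. If you’d rather keep raw copies of a few tables, such as model evaluations or user feedback, here is a stdlib-only sketch that writes each table to a JSON file. The table name in the usage comment is hypothetical (Django names tables <app>_<model> by default), and the exports/ folder is an assumption.

```python
import json
import sqlite3
from pathlib import Path
from typing import Dict, Iterable


def export_tables(db_path: Path, tables: Iterable[str], out_dir: Path) -> Dict[str, int]:
    """Write each table to <out_dir>/<table>.json as a list of row dicts.

    Returns the number of rows exported per table.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    counts = {}
    con = sqlite3.connect(db_path)
    con.row_factory = sqlite3.Row  # rows can be turned into dicts keyed by column
    try:
        known = {row[0] for row in con.execute("SELECT name FROM sqlite_master WHERE type = 'table'")}
        for table in tables:
            # Only interpolate names that actually exist in the database
            if table not in known:
                raise ValueError(f"unknown table: {table}")
            rows = [dict(r) for r in con.execute(f'SELECT * FROM "{table}"')]
            # default=str turns values JSON can't encode (e.g. BLOBs) into strings
            (out_dir / f"{table}.json").write_text(json.dumps(rows, indent=2, default=str))
            counts[table] = len(rows)
    finally:
        con.close()
    return counts


# Usage (hypothetical table name):
# export_tables(Path("db.sqlite3"), ["predictions_modelevaluation"], Path("exports"))
```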


Generate New Migrations for PostgreSQL

Now, create a fresh set of migrations that reflect the current state of your models.

python manage.py makemigrations

Apply the New Migrations to the SQLite Database

Wait, shouldn’t we be doing this on the PostgreSQL database, not SQLite? We’ll get there, but first let’s test on SQLite. This staging approach is particularly valuable for AI applications where:

- Your database schema may include complex tables for model versioning

- You might have large tables for storing embeddings or feature vectors

- Migration rollbacks could be costly with production training data

By testing with SQLite first, if you mess up, you can simply delete the database, fix the bugs, and restart. Once you’re working with a remote database containing real model data and predictions, it becomes a much more complex problem.

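Run:

python manage.py migrate

If you kept your old SQLite file instead of deleting it, its django_migrations table still records the old migrations, so Django may treat the new 0001_initial as already applied or fail with a “table already exists” error. Deleting the file avoids this; if the existing tables already match your models, python manage.py migrate --fake-initial is the alternative.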
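To confirm what migrate actually recorded, python manage.py showmigrations is the built-in option. Alternatively, this sketch reads Django’s django_migrations bookkeeping table (columns id, app, name, applied) straight from the SQLite file; after a clean reset, each of your apps should list only its fresh initial migration. The app name predictions in the usage comment is hypothetical.

```python
import sqlite3
from collections import defaultdict
from pathlib import Path
from typing import Dict, List


def applied_migrations(db_path: Path) -> Dict[str, List[str]]:
    """Return {app_label: [migration names in applied order]} from django_migrations."""
    if not db_path.exists():
        # Avoid sqlite3.connect creating an empty database by accident
        raise FileNotFoundError(db_path)
    con = sqlite3.connect(db_path)
    try:
        rows = con.execute("SELECT app, name FROM django_migrations ORDER BY app, id").fetchall()
    finally:
        con.close()
    result: Dict[str, List[str]] = defaultdict(list)
    for app, name in rows:
        result[app].append(name)
    return dict(result)


# Usage (hypothetical app name): expect ['0001_initial'] after a clean reset
# print(applied_migrations(Path("db.sqlite3")).get("predictions"))
```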

Finally, update your settings.py

Now you need to go into your settings.py and update the DATABASES variable accordingly.

If you’re using a DigitalOcean managed PostgreSQL database, it looks something like this:

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.postgresql',
        'NAME': 'your_database_name',
        'USER': 'your_database_user',
        'PASSWORD': 'password',  # placeholder: don't commit real credentials
        'HOST': 'xxxxxxxxxxxx.a.db.ondigitalocean.com',
        'PORT': '25060',
        'OPTIONS': {
            'sslmode': 'require',
            # client_encoding is a valid libpq connection option (Django
            # already requests UTF8). default_transaction_isolation and
            # timezone are NOT connection options and don't belong here;
            # Django's docs suggest setting them per role with
            # ALTER ROLE ... SET to skip Django's per-connection SET queries.
            'client_encoding': 'UTF8',
        },
    }
}
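Hard-coding the password in settings.py means it ends up in version control. A common alternative, sketched here under assumptions, is to build DATABASES from environment variables and fall back to the local SQLite file when they’re absent, so the same settings work for prototyping and production. The helper build_databases and the variable names (DB_HOST, DB_NAME, DB_USER, DB_PASSWORD, DB_PORT, DB_SSLMODE) are hypothetical.

```python
from pathlib import Path
from typing import Mapping


def build_databases(env: Mapping[str, str], base_dir: Path) -> dict:
    """Return a Django DATABASES dict from environment-style variables.

    The variable names are hypothetical; rename them to match your deployment.
    Without DB_HOST, fall back to the local SQLite file used for prototyping.
    """
    if not env.get("DB_HOST"):
        return {
            "default": {
                "ENGINE": "django.db.backends.sqlite3",
                "NAME": base_dir / "db.sqlite3",
            }
        }
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "NAME": env["DB_NAME"],
            "USER": env["DB_USER"],
            "PASSWORD": env["DB_PASSWORD"],
            "HOST": env["DB_HOST"],
            # 5432 is PostgreSQL's default; DigitalOcean managed databases use 25060
            "PORT": env.get("DB_PORT", "5432"),
            "OPTIONS": {"sslmode": env.get("DB_SSLMODE", "require")},
        }
    }


# In settings.py (after `import os` and the usual BASE_DIR definition):
# DATABASES = build_databases(os.environ, BASE_DIR)
```

Missing required variables raise a KeyError at startup, which is usually preferable to silently connecting with wrong credentials.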

Start migrating

Now, you should be able to just run python manage.py migrate, but often, if you just created a new database and user, this won’t be enough.

Common errors:

Not enough permissions:

django.db.migrations.exceptions.MigrationSchemaMissing: Unable to create the django_migrations table (permission denied for schema public LINE 1: CREATE TABLE "django_migrations" ("id" bigint NOT NULL PRIMA...

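You just created the user and database, so now you have to give that user permissions: right now it can’t do anything. Since PostgreSQL 15, ordinary users (other than the database owner) no longer get CREATE on the public schema by default, which is exactly what this error is about.

Log into your PostgreSQL server as an admin user (for example with psql) and run the following commands: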

/* grant the necessary privileges to your database user */

GRANT ALL PRIVILEGES ON DATABASE your_database_name TO your_database_user;

/* that user needs permission to create tables in the public schema specifically */

GRANT ALL ON SCHEMA public to your_database_user;

Alternatively, for a production AI system, consider giving the role your application runs under more granular permissions:

GRANT SELECT, INSERT, UPDATE ON ALL TABLES IN SCHEMA public TO your_database_user;

GRANT USAGE, SELECT ON ALL SEQUENCES IN SCHEMA public TO your_database_user;

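These allow model inference and writing predictions while preventing accidental deletions. Keep in mind that a role with only these grants can’t run migrations (run migrate with a role that can create tables in the schema), that ON ALL TABLES only covers tables that exist when you run the grant, and that any Django code that deletes rows will fail under this role.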
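Because grants ON ALL TABLES don’t cover tables created by later migrations, a sketch of a hypothetical helper that produces the statements for a runtime role, adding PostgreSQL’s ALTER DEFAULT PRIVILEGES so future tables and sequences are covered too. It assumes migrations run under a separate role that owns the tables; since ALTER DEFAULT PRIVILEGES only affects objects later created by the role that executes it, run the output as that migration role. The role name app_runtime is made up.

```python
from typing import List


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling embedded double quotes.

    Quoted names are case-sensitive: pass them exactly as stored
    (lowercase if the role was created without quotes).
    """
    return '"' + name.replace('"', '""') + '"'


def runtime_role_grants(role: str, schema: str = "public") -> List[str]:
    """SQL granting read/insert/update (no DELETE) to a runtime role."""
    r, s = quote_ident(role), quote_ident(schema)
    return [
        # Existing objects
        f"GRANT SELECT, INSERT, UPDATE ON ALL TABLES IN SCHEMA {s} TO {r};",
        f"GRANT USAGE, SELECT ON ALL SEQUENCES IN SCHEMA {s} TO {r};",
        # Objects created later by the role running these statements
        f"ALTER DEFAULT PRIVILEGES IN SCHEMA {s} GRANT SELECT, INSERT, UPDATE ON TABLES TO {r};",
        f"ALTER DEFAULT PRIVILEGES IN SCHEMA {s} GRANT USAGE, SELECT ON SEQUENCES TO {r};",
    ]


# Usage (hypothetical role): print, then paste into psql as the migration role
# print("\n".join(runtime_role_grants("app_runtime")))
```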

Conclusion: Ready for AI Production

After completing these migration steps, your Django application is now backed by PostgreSQL and ready for production AI workloads. You’ll benefit from:

- Efficient storage and retrieval of large training datasets

- Reliable handling of concurrent model inference requests

- Mature backup and replication tooling (pg_dump, streaming replication) for protecting valuable ML assets

- Robust transaction support for maintaining data integrity during model updates

- Advanced querying capabilities for analyzing model performance

Remember that while SQLite served us well for prototyping, PostgreSQL provides the foundation needed to scale your AI application with confidence. Whether you’re serving real-time predictions or storing massive embedding vectors, you now have a database that can grow with your needs.

As a next step, tune your PostgreSQL instance for your ML workload and keep monitoring its performance as your AI application scales.